The robot pirates

BitDepth #1295

ANYONE WHO thinks publishing on the internet is easy is likely to be in for a big surprise, particularly after the profile of their web site begins to rise.

At technewstt.com, the news web site for which I serve as both editor and webmaster, I get to enjoy all the hassles of high-profile publishers with none of the perks.

As a super-niche site, it doesn't draw the impressive numbers generated by general-interest online news publications, but I do get the professional attention of hackers, whose efforts briefly bore fruit a month ago, creating a flood of spam from my contact page.

It's odd seeing your e-mail client fill with spam sent from your own address. Fixing that was a quick two-step process: adding a digital lure for automated bots and upgrading the Google CAPTCHA used on the site.
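A common form of digital lure is a honeypot field: a form input hidden from human visitors, which form-filling bots tend to complete anyway. The column doesn't describe how the lure on technewstt.com works, so what follows is only a minimal sketch under stated assumptions, not the site's code. The field name `website` and the functions `is_probably_bot` and `handle_contact_form` are hypothetical, and a form submission is modelled as a plain dictionary.

```python
# Hypothetical honeypot check for a contact form (illustrative, not the site's code).
# Assumption: the form includes an input named "website" that is hidden from humans
# with CSS, so a real visitor leaves it empty while a form-filling bot fills it in.

HONEYPOT_FIELD = "website"  # hypothetical field name


def is_probably_bot(form: dict[str, str]) -> bool:
    """Return True if the hidden honeypot field was filled in."""
    return form.get(HONEYPOT_FIELD, "").strip() != ""


def handle_contact_form(form: dict[str, str]) -> str:
    """Drop suspected bot submissions; otherwise accept the message."""
    if is_probably_bot(form):
        return "discarded"  # ignore quietly so the bot isn't told it was caught
    return "accepted"       # a real handler would then verify the CAPTCHA and send mail


if __name__ == "__main__":
    human = {"name": "Reader", "message": "Hello", "website": ""}
    bot = {"name": "x", "message": "Buy now", "website": "http://spam.example"}
    print(handle_contact_form(human))  # accepted
    print(handle_contact_form(bot))    # discarded
```

The honeypot costs human visitors nothing, which is why it pairs well with a CAPTCHA: the lure catches lazy bots silently, and the CAPTCHA is left to handle the more sophisticated ones.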

Bots, short for robots, are bits of code that roam the web, and the malicious ones search web sites for vulnerabilities.

They are an integral part of the architecture of the internet, first created to assess the size and number of interconnected computers that comprised the earliest versions of the global network.

The code evolved into the web crawlers that constantly scour the internet for changes to add to search-engine databases, but like most code created to be useful, bots can also be a nuisance.

The latest irritation comes in the form of content scrapers: code that searches for new posts meeting certain criteria, copies them and uses them to pad dummy web sites set up to attract viewers who will be served programmatic advertising.

Traditional anti-copy tools don't work with code-level theft.

I usually find out when the internal links embedded in the original story, which point to other stories on my site, trigger automatic pingbacks requesting approval for those links.

That's when I discover that the story has been stolen.

Is this fair? No.

Is this annoying? Oh yes.

For Grant Taylor, managing director of Newsday, the constant leak of pirated stories is a source of frustration.

"The hardest thing is identifying who is behind these web sites," Taylor said.

"Because it's only a copyright issue, you can't get the police involved. It isn't a criminal matter, so the mechanisms to deal with it just aren't that strong."

Much of the most troubling piracy of Newsday stories thrives on Facebook, where "news" pages offer up copied stories in their entirety.

"Some of them might put a link to the site, but who's going to click on that when the whole story is there?

"I wouldn't mind if it was a short excerpt and a link, but..."

While an excerpt and a link is an accepted way of pointing readers back to a story, even that is steadily losing value, as many readers are content to skim a headline and a paragraph and consider the story read (http://ow.ly/flrb50EcNlO).

The TechNewsTT experience with professional content scrapers is disturbingly thorough. Content is taken whole and attributed to an obviously fake author on the destination site in an automated operation.

Anyone willing to run a web site off stolen intellectual property isn't, as you might expect, going to respond to e-mails of protest.

Should I decide to take action, I've already taken one pre-emptive step by installing WordProof, a tool that establishes first authorship by logging an unerasable token on the EOS blockchain at publication.

As a defensive measure, it only has value once legal action is taken. While Google's search algorithms tend to be smart about first publication, authorship still counts for very little in the climate of wanton sharing that prevails on social media.
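Timestamping tools of this kind broadly work by recording a fingerprint of a story at the moment it is published, so that a later copy can be matched against a record that demonstrably came first. The sketch below only computes such a fingerprint, a SHA-256 hash of the headline and text plus a UTC timestamp. SHA-256, the record layout and the name `fingerprint_post` are assumptions chosen for illustration; this is not WordProof's actual format, and it writes nothing to the EOS blockchain.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint_post(title: str, body: str, published: datetime) -> dict:
    """Build a hypothetical timestamp record for a post (not WordProof's format).

    Any change to the title or body, even a single character, produces a
    different hash, so a record logged at publication can later be compared
    with a scraped copy of the story.
    """
    content = f"{title}\n{body}".encode("utf-8")
    return {
        "sha256": hashlib.sha256(content).hexdigest(),
        "published_utc": published.astimezone(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    original = fingerprint_post("The robot pirates", "ANYONE WHO thinks...", now)
    scraped = fingerprint_post("The robot pirates", "ANYONE WHO thinks...", now)
    edited = fingerprint_post("The robot pirates", "Anyone who thinks...", now)
    print(json.dumps(original, indent=2))
    print(original["sha256"] == scraped["sha256"])  # True: identical text
    print(original["sha256"] == edited["sha256"])   # False: the text differs
```

A word-for-word scrape yields the same hash as the logged record, which is what lets an earlier entry on a tamper-resistant ledger point to the original publisher.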

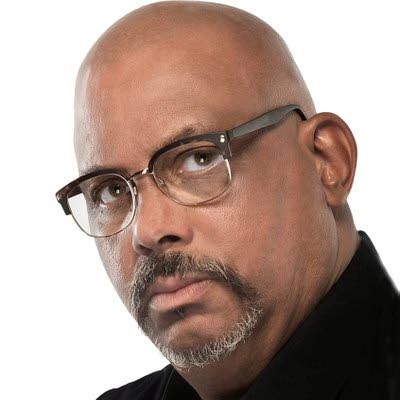

Mark Lyndersay is the editor of technewstt.com. An expanded version of this column can be found there.
