Why Facebook's 'transparency' should worry you

BitDepth#1317

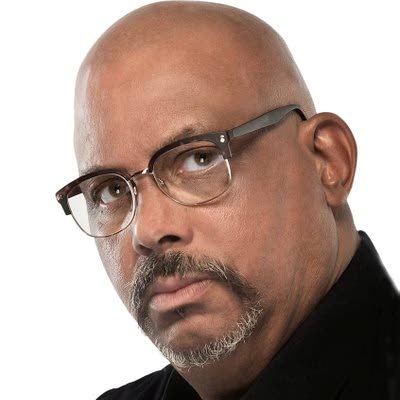

MARK LYNDERSAY

FACEBOOK recently issued its first quarterly transparency document, the Widely Viewed Content Report, covering posts in the US for the second quarter of 2021, but it wasn't the first report that the company produced.

The first was an analysis of Q1 2021, and the social media company didn't like the results.

After the New York Times (NYT) reported the quashing of the first transparency report, a PDF of its findings was released.

Both reports seem largely innocuous, listing top posts and popular domains and links, heavily populated by feel-good video posts and avuncular memes.

Facebook argues that posts with the most views during Q2 attracted just 0.1 per cent of the volume of views on its social media service.

Posts with a link to external content were an even smaller minority, representing just 12.9 per cent of news feed content in the US.

But it's important to consider what Facebook is not reporting in its public analysis of content.

The company is not being transparent about the quantity of hate speech, disinformation and political gerrymandering it has identified on its platform, nor has it explained what it has done to limit the visibility and spread of such posts.

CrowdTangle, a Facebook content analysis tool, was used by the NYT to understand the spread of a misinformation article by Joseph Mercola, an osteopathic physician in Florida whose false anti-vaccination claims reached more than 400,000 people.

Mercola tops the Disinformation Dozen, who together post 65 per cent of all anti-vaccine social media messages.

Withholding its first transparency report isn't the only action that Facebook has taken to limit access to its inner workings.

In April, the NYT reported that CrowdTangle, which had operated semi-autonomously within Facebook since 2016, was broken up and added to the social network's integrity team.

Roy Austin Jr, the son of a popular former US ambassador to TT, is now Facebook's VP of civil rights and deputy general counsel.

He spoke at Amcham's Tech Summit on July 6 in a session on "Tech as a force for good."

"I see my job as two things: ensure that Facebook does no harm and see that Facebook does good," Austin said.

"If we can help mitigate the harm or concerns around issues such as hate speech, false information and bias, Facebook's stated goal of building a global community that works for all of us comes closer to a reality."

Such optimistic views are not uncommon among Facebook's employees and the company vigorously positions itself as a neutral, benign platform for public conversation and comment.

In some ways, it is exactly that, and the toxic environments that flare up on its pages are often an accurate, if depressing, barometer of unpalatable public thought and sentiment.

The company's eagerness to be seen as a good guy often makes it look like an overcompensating villain.

Unfortunately, because Facebook is also a walled garden, its stated intentions cannot be compared to its actual practice, which is opaque to most observers.

Facebook will, undoubtedly, argue that such restrictions are necessary after the humiliations of Cambridge Analytica, but the company cannot aspire to transparency without offering a more comprehensive, publicly accessible analytical view of its content and behaviour as a business.

If you don't like Facebook, you don't have to join, but it's hard to ignore the influence and power of a publishing platform that brings communication tools to a third of the global population.

By any reasonable measurement of the term, Facebook is a publisher, but it's one that continues to deny both that role and the responsibility of sharing information on a global scale.

Mark Lyndersay is the editor of technewstt.com. An expanded version of this column can be found there.
